In forestry practice, there is currently a pressing need for automated surveying and monitoring of damaged trees and forest stands. Against this background, the “BeechSAT” research project was launched at the Bavarian State Institute of Forestry (LWF) in August 2019. In addition to using aerial images, the project aimed in particular to test the suitability of satellite-based sensors for detecting damage. The focus was on detecting damaged dominant beech trees.

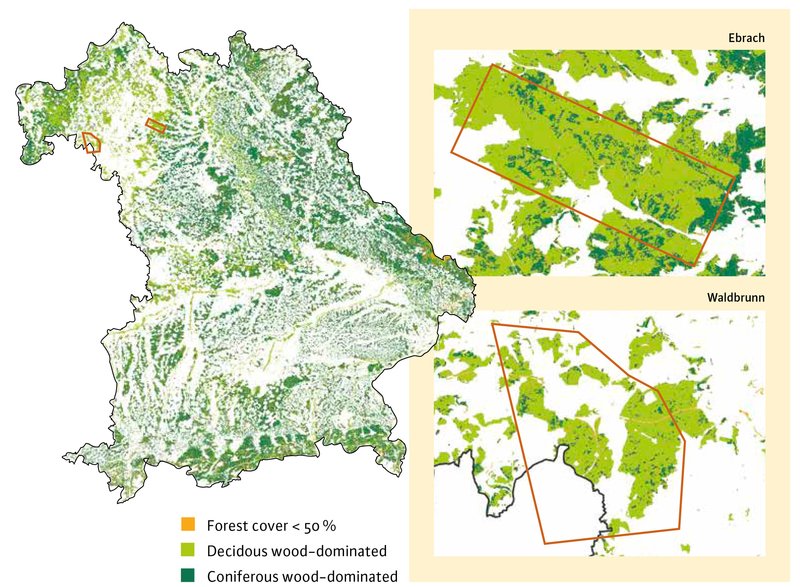

The research centred on two areas in Lower and Upper Franconia:

- The “Waldbrunn” area (size: 125 km²) is to the southwest of the city of Würzburg and comprises the Irtenberg and Guttenberg forests.

- The “Ebrach” area (size: 50 km²) includes sections of the Bürgerwald, Ebrach and Koppenwind forests.

Figure 1 shows the locations of the two study areas against a background map of deciduous-dominated and coniferous-dominated forest areas. An important criterion for selecting these areas was the differing density and distribution of damaged beech trees. In the Waldbrunn area, damage occurs over larger areas: larger, contiguous groups of trees are affected as well as individual trees. In Ebrach, by contrast, damage at the time of recording tended to be confined to small patches or individual trees.

Selection of aerial and satellite image data

Aerial surveys were carried out for both project areas at the start of the BeechSAT research project in August 2019. The goal was to generate stereoscopic aerial images with high spatial resolution. These data served as a reference data set for training the classification algorithms and for validating the satellite image data.

From the aerial image data, “true orthophotos” with a ground resolution of 0.20 m were generated at the LWF. Because true orthophotos are rectified using a digital surface model that includes the tree canopy, tree positions are mapped more accurately in them than in classical orthophotos, which are rectified using a terrain model only. In parallel with the aerial surveys, image data from various satellite systems were acquired. In the end, the following data sets were available for the investigations in BeechSAT:

- Aerial image data: 4 spectral bands with 0.20 m ground resolution

- WorldView-3: 8 spectral bands with 1.20 m and one panchromatic band with 0.30 m ground resolution

- SkySat: 4 spectral bands with 1.10 m and one panchromatic band with 0.80 m ground resolution

- PlanetScope Dove: 4 spectral bands with 3 m ground resolution

- RapidEye: 5 spectral bands with 5 m ground resolution

- Sentinel-2: 13 spectral bands with 10 m, 20 m or 60 m ground resolution

The satellite systems listed above differ in their spatial, spectral, radiometric and temporal resolution, as well as in the cost of the available data products. On the basis of these data, the advantages and disadvantages of the different aerial and satellite image data could be compared. Of these, only the Sentinel-2 data from the EU’s Copernicus Earth observation programme are available free of charge.
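Why tree positions are more accurate in true orthophotos can be illustrated with the standard relief-displacement relation for vertical aerial images: a point at height h above the reference surface appears shifted radially by roughly D·h/H, where D is its horizontal distance from the nadir point and H the flying height above ground. A classical orthophoto, rectified with a terrain model, keeps this shift for tree crowns; rectification with the surface model largely removes it. The sketch below is a rough calculation only, and the values (a 30 m tree, 500 m from nadir, 3,000 m flying height) are illustrative assumptions, not BeechSAT flight parameters.

```python
def relief_displacement_m(height_m: float, nadir_distance_m: float, flying_height_m: float) -> float:
    """Approximate horizontal shift (m) of a treetop in a terrain-rectified orthophoto.

    Uses the relief-displacement relation d = D * h / H for vertical imagery, where D is the
    horizontal distance from the nadir point and H the flying height above ground.
    """
    if flying_height_m <= 0:
        raise ValueError("flying height must be positive")
    return nadir_distance_m * height_m / flying_height_m


# Illustrative values only (assumed, not the BeechSAT flight parameters).
shift = relief_displacement_m(height_m=30.0, nadir_distance_m=500.0, flying_height_m=3000.0)
pixels = shift / 0.20  # the same shift expressed in pixels of the 0.20 m true orthophotos
print(f"{shift:.1f} m = {pixels:.0f} px")
```

Under these assumptions, a treetop would be displaced by several metres, i.e. by more than the width of many pixels at 0.20 m resolution, which is enough to misplace a crown relative to its neighbours.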

Machine learning for the detection of damaged trees

A key question in the BeechSAT project was the extent to which damaged tree crowns can be assessed automatically in remote-sensing data, and with what accuracy. To answer this question, various machine learning approaches were tested. In particular, methods of “supervised learning” were used: a method for the semi-automatic classification of damaged and healthy trees is first “trained” with a manually created learning data set, which is why this data set is often also called a training data set. In this study, the training data consist of image samples showing both healthy and damaged tree crowns.
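The train-then-classify principle of supervised learning can be shown with a deliberately simple stand-in: a nearest-centroid classifier. This is not one of the methods used in BeechSAT (Random Forest, support vector machines, artificial neural networks and deep learning are named later in the article); it only illustrates how manually labelled samples define the classes. The feature values (mean red and near-infrared reflectance per crown) are hypothetical.

```python
from statistics import fmean

# Hypothetical training samples: (mean red, mean near-infrared reflectance) per crown,
# each labelled manually by an interpreter.
TRAINING = [
    ((0.04, 0.45), "healthy"),
    ((0.05, 0.40), "healthy"),
    ((0.03, 0.50), "healthy"),
    ((0.12, 0.22), "damaged"),
    ((0.15, 0.18), "damaged"),
    ((0.10, 0.25), "damaged"),
]


def train_centroids(samples):
    """'Training': average the feature vectors of each labelled class."""
    by_class = {}
    for features, label in samples:
        by_class.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(dim) for dim in zip(*vectors))
        for label, vectors in by_class.items()
    }


def classify(features, centroids):
    """Assign the class whose centroid is nearest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((f - c) ** 2 for f, c in zip(features, centroids[label])),
    )


centroids = train_centroids(TRAINING)
print(classify((0.06, 0.42), centroids))
print(classify((0.13, 0.20), centroids))
```

The classifier only knows what the labelled samples show it, which is why the quality and coverage of the training data set matter so much.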

In the BeechSAT project, several supervised learning methods were used, including both classical methods and deep learning methods. These are discussed further in the section “Improved accuracy with deep learning”.

The spatial resolution of the image data is particularly important

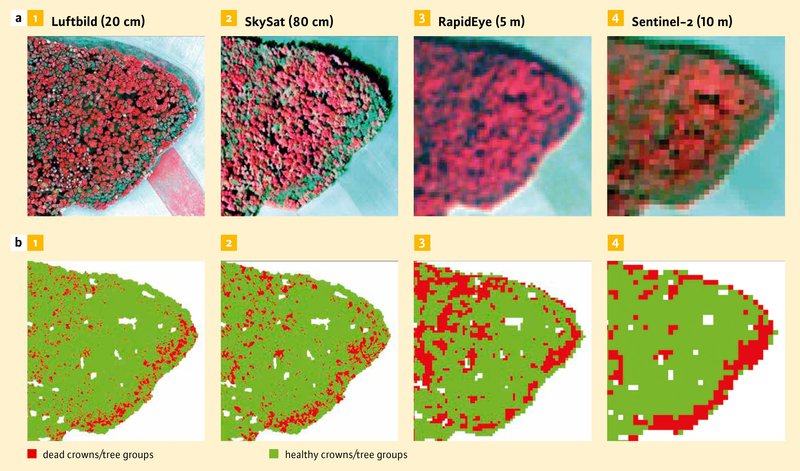

The results of the BeechSAT research project show that automated detection of damaged and dead trees using remote-sensing data is possible in principle. Figure 4 shows an example of the result of a semi-automatic classification based on aerial image data and image data from various satellites (SkySat, RapidEye and Sentinel-2) for a small section of the Waldbrunn study area. The top row shows colour-infrared (CIR) representations of the remote-sensing data sets. In the CIR images, dead trees can be identified by their bluish-green and greyish-white colour tones, whereas healthy tree crowns appear in different shades of red. The bottom row shows the corresponding results of the semi-automatic image classification, in which the dead tree crowns or groups of trees that were identified are shown in red.
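The colour conventions of CIR images follow from the standard band assignment: near-infrared is displayed as red, red as green, and green as blue. Vital foliage reflects strongly in the near-infrared and therefore appears red, while dead crowns lose this near-infrared signal and appear in greyish tones. The sketch below uses made-up reflectances and an arbitrary display stretch (`max_reflectance`, an assumption); NDVI is added as a common numeric counterpart and is not stated in the source to be a feature used in BeechSAT.

```python
from __future__ import annotations


def cir_display(green: float, red: float, nir: float, max_reflectance: float = 0.5) -> tuple[int, int, int]:
    """Map reflectances to an RGB display triple using the standard CIR band assignment:
    near-infrared -> red channel, red -> green channel, green -> blue channel."""
    def scale(value: float) -> int:
        # Linear stretch to 0..255, clipped; max_reflectance is an assumed display setting.
        return max(0, min(255, round(255 * value / max_reflectance)))
    return scale(nir), scale(red), scale(green)


def ndvi(red: float, nir: float) -> float:
    """Normalised difference vegetation index, (NIR - red) / (NIR + red)."""
    return (nir - red) / (nir + red)


# Hypothetical reflectances (green, red, NIR) for a vital and a dead beech crown.
vital = (0.08, 0.04, 0.45)
dead = (0.22, 0.24, 0.27)

print(cir_display(*vital), round(ndvi(vital[1], vital[2]), 2))
print(cir_display(*dead), round(ndvi(dead[1], dead[2]), 2))
```

The vital crown is dominated by the red display channel, while the dead crown yields three similar channel values, i.e. a grey tone.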

The manual creation of the training data for the supervised image classification is the most time-consuming step in this approach. The achievable accuracy depends on the quality of the input data. The information content of the image data must therefore be evaluated in advance by an experienced remote-sensing interpreter to decide which categories can potentially be distinguished in the available data.
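Classification accuracy is usually assessed against independent reference data, for example crowns interpreted in the aerial images. The sketch below computes common confusion-matrix measures: overall accuracy, producer's accuracy (share of reference samples of a class that were found) and user's accuracy (share of samples assigned to a class that are correct). The labels are hypothetical, and the source does not state which accuracy measures BeechSAT reported.

```python
from collections import Counter


def accuracy_report(reference, predicted, classes):
    """Confusion-matrix based accuracy measures for a thematic classification."""
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must have the same length")
    pairs = Counter(zip(reference, predicted))  # (true class, predicted class) -> count
    overall = sum(pairs[(c, c)] for c in classes) / len(reference)
    producers, users = {}, {}
    for c in classes:
        ref_total = sum(pairs[(c, p)] for p in classes)   # samples that truly belong to c
        pred_total = sum(pairs[(r, c)] for r in classes)  # samples the classifier labelled c
        producers[c] = pairs[(c, c)] / ref_total if ref_total else float("nan")
        users[c] = pairs[(c, c)] / pred_total if pred_total else float("nan")
    return overall, producers, users


# Hypothetical reference (aerial-image interpretation) vs. classifier output for ten crowns.
reference = ["dead"] * 4 + ["healthy"] * 6
predicted = ["dead", "dead", "dead", "healthy",
             "healthy", "healthy", "healthy", "healthy", "healthy", "dead"]

overall, producers, users = accuracy_report(reference, predicted, ["dead", "healthy"])
print(overall, producers, users)
```

Reporting producer's and user's accuracy separately shows whether errors come from missed dead trees (omission) or from healthy trees flagged as dead (commission).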

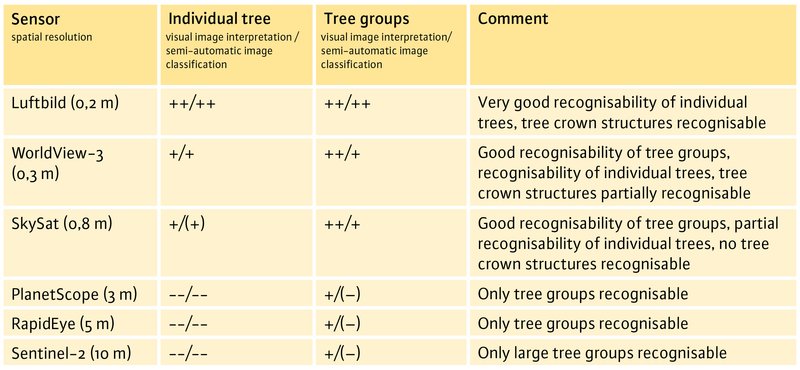

The information content of the remote-sensing data depends particularly on the spatial resolution of the image data. Figure 5 illustrates this: for each remote-sensing data set used in the BeechSAT project, it assesses the options for visual image interpretation and for semi-automatic image classification, separately for individual trees and tree groups.
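A quick calculation shows how strongly ground resolution limits what can be seen of a single crown. The sketch counts how many square pixels fit into a circular crown at each ground resolution listed earlier; the crown diameter of 10 m is an assumed round figure for a dominant beech, not a value from the project.

```python
import math

# Ground resolutions (m) of the data sets listed in the article; for WorldView-3 and
# SkySat both the panchromatic and the multispectral values are shown.
RESOLUTIONS_M = {
    "Aerial images": 0.20,
    "WorldView-3 pan": 0.30,
    "SkySat pan": 0.80,
    "SkySat multispectral": 1.10,
    "WorldView-3 multispectral": 1.20,
    "PlanetScope Dove": 3.0,
    "RapidEye": 5.0,
    "Sentinel-2 (10 m bands)": 10.0,
}


def pixels_per_crown(crown_diameter_m: float, resolution_m: float) -> float:
    """Number of square pixels that fit into a circular crown of the given diameter."""
    crown_area = math.pi * (crown_diameter_m / 2) ** 2
    return crown_area / resolution_m ** 2


CROWN_DIAMETER_M = 10.0  # assumed crown diameter, for illustration only
for name, res in RESOLUTIONS_M.items():
    print(f"{name:28s} {res:5.2f} m  ~{pixels_per_crown(CROWN_DIAMETER_M, res):7.1f} px")
```

Under this assumption, a crown covers roughly two thousand pixels in the aerial images but less than one pixel at 10 m, so at Sentinel-2 resolution individual crowns can only contribute to mixed pixels. This helps explain why individual trees and tree groups are assessed separately.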

Of all the image data used, the aerial images, with the highest spatial resolution, give the best results for recognising damaged and dead trees. In aerial images, tree crown structures, and with them “defoliation” and “crown deadwood” as characteristic damage types, are clearly recognisable. Ideally, the assessment is done manually through stereoscopic interpretation of the aerial images. In the automated evaluation, too, the highest accuracies in the BeechSAT project were achieved with aerial image data that had been ortho-rectified using the digital surface model (DSM), i.e. true orthophotos.

Among the satellite image data, the best results were achieved with the high-resolution sensors of WorldView-3 and SkySat. The very high spatial resolution of the WorldView-3 panchromatic channel (0.30 m) comes very close to that of the aerial image data (0.20 m). As a result, training data for dead dominant trees could be obtained directly from the WorldView-3 image data. For all other satellite image data examined in the project (SkySat, PlanetScope, RapidEye and Sentinel-2), the aerial images had to be used to generate the training data.

For the automated survey of damaged and dead trees, it should be noted that dead deciduous tree crowns cannot yet be reliably distinguished from dead coniferous tree crowns. Differentiating damaged trees by whether they are deciduous or coniferous would currently have to be done manually and retrospectively using aerial photographs. Texture features derived from aerial photographs could possibly help to automate this differentiation. This issue is to be explored in future studies.
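Texture features of the kind mentioned here are often derived from a grey-level co-occurrence matrix (GLCM), which counts how often pairs of grey levels occur at a given pixel offset. The sketch computes GLCM contrast for a horizontal offset on two tiny, made-up patches. Whether such features actually separate dead deciduous from dead coniferous crowns is exactly the open question described above, so this only illustrates the technique.

```python
from collections import Counter


def glcm_contrast(patch, dx=1, dy=0):
    """GLCM contrast for one pixel offset (dx, dy), using a normalised, non-symmetric matrix.

    patch: 2-D list of integer grey levels.
    """
    counts = Counter()
    rows, cols = len(patch), len(patch[0])
    for y in range(rows):
        for x in range(cols):
            x2, y2 = x + dx, y + dy
            if 0 <= x2 < cols and 0 <= y2 < rows:
                counts[(patch[y][x], patch[y2][x2])] += 1  # co-occurring grey-level pair
    total = sum(counts.values())
    # Contrast weights each pair by the squared grey-level difference.
    return sum(n * (i - j) ** 2 for (i, j), n in counts.items()) / total


# Hypothetical 4 x 4 patches with grey levels 0..3.
patch_a = [[2, 2, 3, 3], [2, 2, 3, 3], [2, 3, 3, 3], [2, 2, 2, 3]]  # fairly homogeneous
patch_b = [[0, 3, 0, 3], [3, 0, 3, 0], [0, 3, 0, 3], [3, 0, 3, 0]]  # strongly alternating

print(glcm_contrast(patch_a), glcm_contrast(patch_b))
```

In practice, such values would be computed for several offsets and directions and used as additional input features alongside the spectral bands.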

Improved accuracy with deep learning

In comparison with the classical machine learning methods tested in the BeechSAT project (Random Forest, support vector machines and artificial neural networks), somewhat higher accuracies were achieved with the deep learning methods. The difference was greatest for the high-resolution aerial image data. This seems plausible, since the deep learning methods used “learn” structures, textures and “edges” in the data and can thus benefit from high spatial resolution. Nevertheless, the use of deep learning should be weighed up case by case, especially as it requires a larger training data set than conventional methods. If the training data set is large and diverse enough, however, deep learning is recommended.
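The idea that convolutional networks benefit from fine spatial detail can be illustrated with a single hand-set 3×3 edge kernel, similar in spirit to the filters the first layer of such a network typically learns from data. The sketch applies a Sobel-type kernel to a made-up scene with a one-pixel-wide bright line, and to the same scene coarsened to half the resolution. It is a didactic example only; the article does not specify the network architectures used in BeechSAT.

```python
def correlate_valid(image, kernel):
    """2-D cross-correlation without padding, the operation used in CNN convolution layers."""
    k = len(kernel)
    out_rows, out_cols = len(image) - k + 1, len(image[0]) - k + 1
    return [
        [sum(kernel[i][j] * image[r + i][c + j] for i in range(k) for j in range(k))
         for c in range(out_cols)]
        for r in range(out_rows)
    ]


def block_mean(image, factor):
    """Coarsen an image by averaging non-overlapping factor x factor blocks
    (assumes both dimensions are divisible by factor)."""
    return [
        [sum(image[r + i][c + j] for i in range(factor) for j in range(factor)) / factor ** 2
         for c in range(0, len(image[0]), factor)]
        for r in range(0, len(image), factor)
    ]


def peak(response):
    """Largest absolute filter response."""
    return max(abs(v) for row in response for v in row)


SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to horizontal brightness changes

# Made-up 8 x 8 scene: uniform crown (value 10) crossed by a one-pixel-wide bright line (value 50).
fine = [[50 if col == 3 else 10 for col in range(8)] for _ in range(8)]
coarse = block_mean(fine, 2)  # the same scene at half the spatial resolution

print(peak(correlate_valid(fine, SOBEL_X)), peak(correlate_valid(coarse, SOBEL_X)))
```

At the coarser resolution, the thin structure is averaged with its surroundings, so the strongest edge response drops by half; fine structures like this are what a learned filter can exploit in high-resolution data.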

At present, it cannot be assumed that the training data set used in BeechSAT is sufficient to transfer the existing classification models to other study areas without re-training. This would require including further project areas with additional remote-sensing data. If a transferable deep learning model can be created in the future from such an extended training data set, re-training would in theory no longer be necessary when applying the model to new areas.

Summary

The BeechSAT project showed that automatic detection of dead trees using remote-sensing data is possible in principle. The spatial resolution of the image data proved particularly important, both for visual image interpretation and for semi-automatic evaluation. Aerial image data, with the highest spatial resolution, give the best results for recognising damaged trees. The comparison of the machine learning methods tested showed that slightly higher accuracies can be achieved with deep learning. For the moment, dead deciduous trees cannot be reliably distinguished from dead coniferous trees in an automatic evaluation.

Outlook: The assessment of bark beetle damage to spruce

The developments of the BeechSAT project to date are highly relevant for the potential large-area assessment of dead or dying deciduous trees, and for the follow-up research project IpsSAT. IpsSAT is investigating the possibilities for automatically assessing bark beetle damage to spruce. Its aim is to identify the reddish-brown and grey discolouration of spruce crowns using remote-sensing data. Here too, aerial image data and data from the satellite systems WorldView-3, SkySat, PlanetScope, RapidEye and Sentinel-2 are being tested for their suitability for detecting damage. The automated classification processes of BeechSAT discussed here already provide a very good basis and are now to be adapted and further developed for the questions examined in the IpsSAT study.

BeechSAT was developed in a collaborative project of the LWF (Departments: Information Technology, Soil and Climate, and Silviculture and the Mountain Forest) with the company IAB GmbH, and funded by the Bavarian State Ministry for Nutrition, Agriculture and Forestry.